Computer predicts brain activity associated with different objects

Think of celery, an airplane or a dog. Each of these words, like the thousands of others in the English language, creates a unique pattern of activity in your brain. Now, a team of scientists has developed the first computer programme that can predict these patterns for concrete nouns – tangible things that you can experience with your senses.

With an accuracy of around 70%, the technique is far from perfect but it’s still a significant technological step. Earlier work may have catalogued patterns of brain activity associated with categories of words, but this is the first to predict activity using a computer model. More fundamentally and perhaps more importantly, it sheds new light on how our brains organise and represent knowledge.

Tom Mitchell and colleagues from Carnegie Mellon University built their model by using a technique called functional magnetic resonance imaging (fMRI) to visualise the brain activity of nine volunteers as they concentrated on 60 different nouns. This ‘training set’ consisted of five words from each of 12 categories, such as animals, body parts, tools and vehicles.

Mitchell analysed how these words are used with the help of a “text corpus”, a massive set of texts containing over a trillion words. A text corpus reflects how words are typically used in the English language. Linguists have used such corpora to show that a word’s meaning is captured to some extent by the other words and phrases it frequently appears next to.

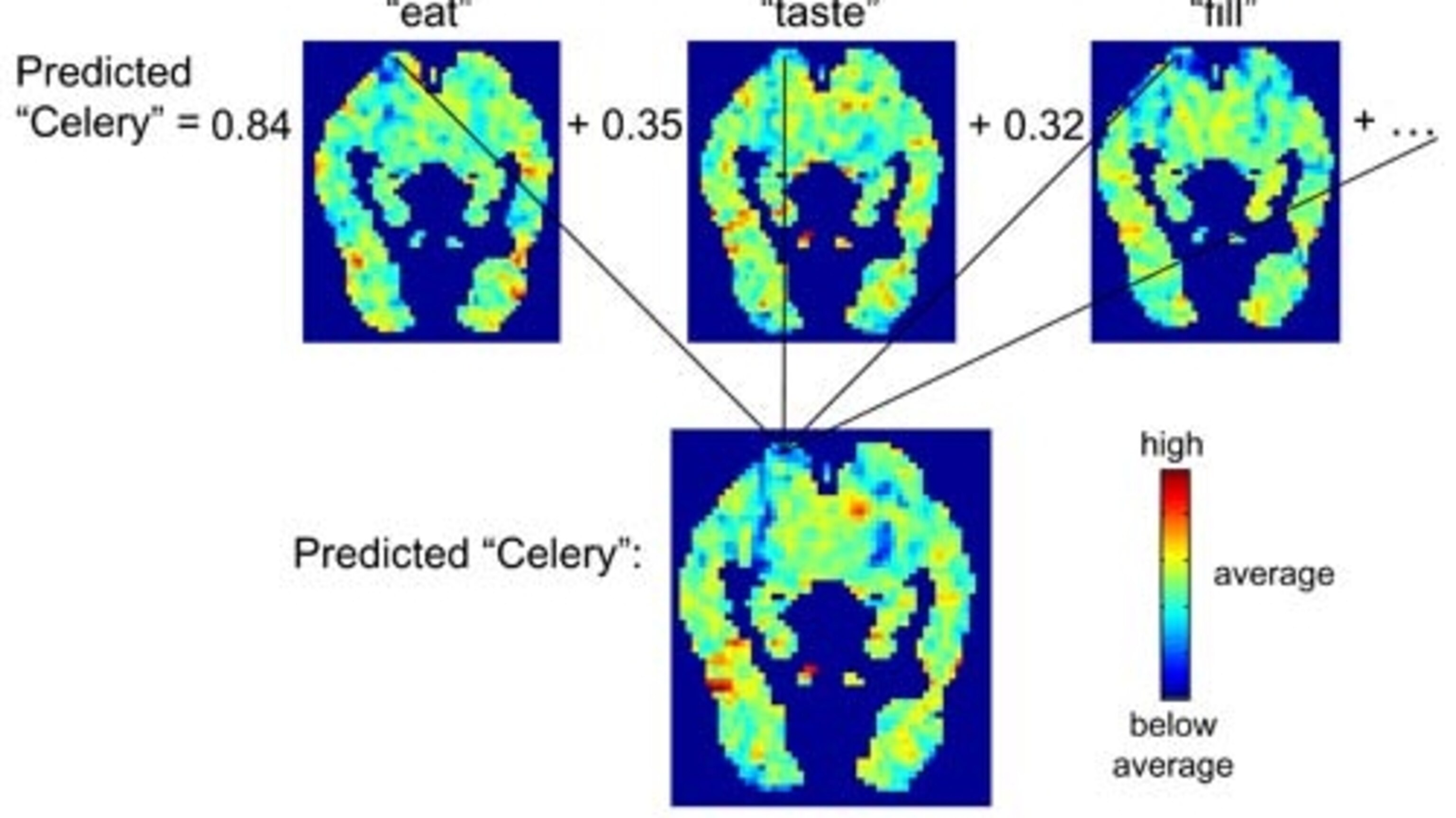

With the corpus, Mitchell worked out how often each of the 60 nouns occurs next to 25 verbs, including “see”, “hear”, “taste”, “enter” and “drive”. All of these verbs relate to sensation and movement, because other studies have suggested that the brain encodes objects in terms of how you sense them and what you can do with them.
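To make the idea of “how often a noun occurs next to a verb” concrete, here is a minimal sketch that turns raw co-occurrence counts into a feature vector for one noun. It assumes naive whitespace tokenisation, a window of three words on either side, scaling the counts to unit length, and a five-verb subset of the list; the function name `cooccurrence_features` and the tiny corpus are hypothetical, and the study's actual window and normalisation may differ.

```python
import math
from collections import Counter

# Hypothetical five-verb subset of the 25 sensory-motor verbs, for illustration only
VERBS = ["see", "eat", "drive", "push", "taste"]


def cooccurrence_features(noun, sentences, verbs=VERBS, window=3):
    """Count how often each verb appears within `window` tokens of `noun`,
    then scale the counts to unit length (an assumed normalisation)."""
    counts = Counter()
    for sentence in sentences:
        tokens = sentence.lower().split()  # naive whitespace tokenisation
        for i, token in enumerate(tokens):
            if token != noun:
                continue
            nearby = tokens[max(0, i - window): i + window + 1]
            for verb in verbs:
                counts[verb] += nearby.count(verb)
    raw = [counts[verb] for verb in verbs]
    norm = math.sqrt(sum(c * c for c in raw))
    return [c / norm if norm else 0.0 for c in raw]


# Tiny made-up corpus standing in for the trillion-word text collection
corpus = [
    "you can eat celery raw",
    "i like to taste celery soup",
    "they drive the car to work",
    "we push the car uphill",
]
print(cooccurrence_features("celery", corpus))  # about [0.0, 0.707, 0.0, 0.0, 0.707]
```

In this toy corpus, “celery” sits near “eat” and “taste” once each, so those two features share the weight equally and the driving and pushing features stay at zero.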

The model effectively ‘describes’ nouns in terms of their relationship to these 25 key verbs, and the volunteers’ fMRI images revealed how these relationships affect neural activity in different parts of the brain. With this information, the programme can predict the activity patterns triggered by thousands of other nouns that it hasn’t seen before. Using the text corpus, it works out how each new noun relates to the 25 master-verbs. It then adds up the fMRI features predicted by these relationships into one combined whole.
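The “adding up” step can be read as a linear model: each voxel's predicted activity is a weighted sum of a noun's verb features, with one learned weight per voxel and verb. The sketch below assumes those weights are fitted by ordinary least squares with a tiny ridge term (solved via the normal equations), which may not match the study's exact fitting procedure. The names `fit_verb_signatures` and `predict_image` are hypothetical, and the data are synthetic.

```python
def solve(a, b):
    """Solve a @ x = b for a square matrix a by Gaussian elimination with partial pivoting."""
    n = len(a)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def fit_verb_signatures(features, images, ridge=1e-6):
    """Learn weights[voxel][verb] by ridge-regularised least squares.
    features: one verb-feature vector per training noun
    images:   one voxel-activation vector per training noun"""
    n_verbs, n_voxels = len(features[0]), len(images[0])
    # Left side of the normal equations, (F^T F + ridge * I), is shared by every voxel
    gram = [[sum(f[i] * f[j] for f in features) + (ridge if i == j else 0.0)
             for j in range(n_verbs)] for i in range(n_verbs)]
    weights = []
    for v in range(n_voxels):
        rhs = [sum(f[i] * img[v] for f, img in zip(features, images)) for i in range(n_verbs)]
        weights.append(solve(gram, rhs))
    return weights


def predict_image(weights, feature_vec):
    """Each voxel's predicted activity is the sum over verbs of weight * feature."""
    return [sum(w * f for w, f in zip(row, feature_vec)) for row in weights]


# Synthetic check: 2 voxels, 3 verbs, 4 training nouns generated from known weights
train_features = [[1, 0, 0], [0, 1, 0], [0, 0, 1], [0.5, 0.5, 0.2]]
true_weights = [[1.0, 2.0, 3.0], [-1.0, 0.0, 0.5]]
train_images = [predict_image(true_weights, f) for f in train_features]
learned = fit_verb_signatures(train_features, train_images)
print(predict_image(learned, [0.3, 0.1, 0.9]))  # very close to [3.2, 0.15]
```

Because the weights are learned once from the training nouns, predicting the image for an unseen noun only requires its verb features from the corpus.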

Three trials

To begin with, the team challenged the model with an easy task. They trained it using only 58 of the 60 words, forced it to predict the activation patterns for the remaining two, and asked it to match these predicted images to the actual ones. The work is reminiscent of another recent study that I blogged about, involving a decoder that can accurately work out the image that someone is looking at based solely on their brain activity.

On average, Mitchell’s model successfully matched word to image 77% of the time, far better than the 50% expected by chance. A large proportion of the error was due to inconsistencies in the fMRI images, caused by the volunteers moving their heads within the scanners.
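One way to score this two-word matching test is sketched below: compare each predicted image with each observed one and count the trial as a success if the correct pairing is more similar overall than the swapped pairing. Cosine similarity is assumed as the similarity measure here, and the function names are hypothetical.

```python
import math


def cosine(a, b):
    """Cosine similarity between two activation vectors (assumed non-zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def match_is_correct(pred1, pred2, obs1, obs2):
    """Leave-two-out test: the correct pairing of predicted and observed
    images should be more similar overall than the swapped pairing."""
    correct = cosine(pred1, obs1) + cosine(pred2, obs2)
    swapped = cosine(pred1, obs2) + cosine(pred2, obs1)
    return correct > swapped


def leave_two_out_accuracy(trials):
    """trials: list of (pred1, pred2, obs1, obs2) tuples; each pair's predictions
    are assumed to come from a model retrained without those two words."""
    return sum(match_is_correct(*t) for t in trials) / len(trials)
```

Since each trial is a binary choice between two pairings, a model guessing at random would land near 0.5 on this score.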

The model could even predict brain activity for categories of nouns outside the training set. Mitchell tested for this by retraining it using words from just 10 of the 12 categories, and testing it with words from the excluded groups. For example, he could have taken out vegetable and vehicle words, and tested the model using ‘celery’ and ‘airplane’. Despite the more difficult task, the model still achieved a respectable score of 70%. Even when faced with two words from the same category such as ‘celery’ and ‘corn’, which presumably would be harder to distinguish, the model had an accuracy of 62%. That’s just above the cut-off for a chance finding.
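The harder version of the test changes how the data are split: whole categories are held out, so the model never sees a vegetable or a vehicle during training. The generator below is a minimal sketch of that kind of split, assuming every pair of categories is held out in turn; the function name and toy categories are hypothetical.

```python
from itertools import combinations


def leave_two_categories_out(words_by_category):
    """Yield (train_words, (test_word1, test_word2)) splits in which the two
    test words come from two held-out categories that are absent from training."""
    categories = sorted(words_by_category)
    for c1, c2 in combinations(categories, 2):
        train = [w for c in categories if c not in (c1, c2) for w in words_by_category[c]]
        for w1 in words_by_category[c1]:
            for w2 in words_by_category[c2]:
                yield train, (w1, w2)


toy = {"vegetable": ["celery", "corn"], "vehicle": ["airplane", "car"], "animal": ["dog", "cat"]}
for train, pair in leave_two_categories_out(toy):
    print(pair, "trained on", train)
```

With 12 categories of five words each, this generator would produce 66 category pairs and 25 word pairs per category pair, and every training set would contain 50 words from the remaining 10 categories.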

In the hardest trial yet, the model had to pick out the word that corresponded to a particular fMRI image from a set of over a thousand. Mitchell trained it using 59 words and showed it an fMRI image of the 60th, along with 1001 possible candidates drawn from the most common words in the text corpus. The programme predicted an fMRI image for every single one of these possibilities and ranked them by how closely they matched the target image. Amazingly, the correct word ranked, on average, above 72% of the alternatives.
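Picking one word out of a thousand can be framed as a ranking problem: predict an image for every candidate, sort the candidates by similarity to the observed image, and see where the true word lands. The sketch below assumes cosine similarity and reports a percentile-style rank score (1.0 for first place, 0.0 for last, about 0.5 by chance); the function names and toy vectors are hypothetical.

```python
import math


def cosine(a, b):
    """Cosine similarity between two activation vectors (assumed non-zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def rank_candidates(observed, predictions):
    """Sort candidate words, most similar predicted image first."""
    return sorted(predictions, key=lambda w: cosine(predictions[w], observed), reverse=True)


def rank_accuracy(observed, predictions, true_word):
    """Fraction of the other candidates that the true word outranks."""
    ranking = rank_candidates(observed, predictions)
    return 1 - ranking.index(true_word) / (len(ranking) - 1)


# Made-up three-voxel predictions for three candidate words
preds = {"celery": [0.9, 0.1, 0.0], "airplane": [0.0, 0.2, 0.9], "dog": [0.3, 0.8, 0.1]}
observed = [1.0, 0.2, 0.0]
print(rank_candidates(observed, preds))  # ['celery', 'dog', 'airplane']
print(rank_accuracy(observed, preds, "celery"))  # 1.0
```

The top-ranked candidate is the model's single best guess, while the rank score gives partial credit when the true word lands near, but not at, the top of a long list.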

Lessons

The model’s success suggests that its approach works across a huge range of words with very different meanings. The 25 master verbs, all related to sensing and moving, are the key to this. Mitchell also tested 115 alternative sets of 25 common words drawn at random from the corpus, but none of the resulting models came close to the accuracy of the original. On average, they made accurate predictions only about 60% of the time.
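The control experiment boils down to a simple comparison: score the chosen verb set, score many random word sets of the same size, and see how often the chosen set wins. In this minimal sketch the `evaluate` callback is a hypothetical stand-in for retraining the whole model with a given feature set and returning its matching accuracy; the fixed seed and function name are also assumptions.

```python
import random


def compare_to_random_sets(chosen, vocabulary, evaluate, n_sets=115, set_size=25, seed=0):
    """Score `chosen`, then score `n_sets` random word sets of the same size drawn
    from `vocabulary` (a list). Returns (chosen score, mean random score,
    fraction of random sets that score strictly below the chosen set)."""
    rng = random.Random(seed)  # fixed seed keeps the comparison reproducible
    chosen_score = evaluate(chosen)
    random_scores = [evaluate(rng.sample(vocabulary, set_size)) for _ in range(n_sets)]
    mean_random = sum(random_scores) / n_sets
    frac_beaten = sum(score < chosen_score for score in random_scores) / n_sets
    return chosen_score, mean_random, frac_beaten
```

For a real analysis, each call to `evaluate` would be expensive (a full round of leave-two-out training and testing), which is why a fixed, modest number of random sets is a practical choice.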

This suggests that sensory-motor verbs act as fundamental building blocks for representing the meanings of objects. It says a lot about how we think about physical things. A rose is as much defined by how it smells, looks or feels as it is by a description in a dictionary. The fact that these individual aspects can be combined into a predictive whole implies that an object is partly defined by the sum of everything you can sense about it and do with it.

This isn’t just a philosophical point; it’s reflected at a neurological level too. According to the fMRI images, the degree to which a noun occurs with the word ‘eat’ predicts the amount of activity it triggers in the gustatory cortex, a part of the brain involved in taste. How commonly a noun appears next to ‘push’ affects the activity of the postcentral gyrus, which is involved in planning movements. The list goes on and on.
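Links like “eat” and the gustatory cortex come from reading the learned weights as brain maps: each verb has one weight per voxel, and one can ask where those weights are largest. The sketch below averages a verb's weights within labelled regions and returns the strongest region. The region labels, weights and function name are all invented for illustration; a real analysis would use an anatomical atlas and far more voxels.

```python
def top_region_for_verb(weights, verbs, voxel_regions, verb):
    """Return the region whose voxels have the highest mean learned weight for
    `verb`. weights[v][k] is voxel v's weight for verbs[k]; voxel_regions[v]
    is a hypothetical anatomical label for voxel v."""
    k = verbs.index(verb)
    totals, counts = {}, {}
    for row, region in zip(weights, voxel_regions):
        totals[region] = totals.get(region, 0.0) + row[k]
        counts[region] = counts.get(region, 0) + 1
    return max(totals, key=lambda region: totals[region] / counts[region])


# Made-up weights for four voxels and two verbs
verbs = ["eat", "push"]
weights = [[0.9, 0.1], [0.8, 0.0], [0.1, 0.7], [0.0, 0.9]]
regions = ["gustatory", "gustatory", "postcentral", "postcentral"]
print(top_region_for_verb(weights, verbs, regions, "eat"))   # gustatory
print(top_region_for_verb(weights, verbs, regions, "push"))  # postcentral
```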

But that’s not the whole story. Mitchell also found strong activity in parts of the brain that have nothing to do with sensory or motor functions, and everything to do with long-term memory and planning. The model’s predictions are also more accurate for the left half of the brain than the right. That’s to be expected; after all, previous studies suggest that the left hemisphere has a greater role in representing the meaning of words.

This study is only the start of Mitchell’s work. If concrete nouns are linked to senses and actions, how does the brain represent the meaning of intangible abstract nouns, like love or fear? How does it deal with other parts of speech like prepositions and adjectives, or with combinations of words like phrases and sentences?

Reference: Science doi:10.1126/science.1152876

Image: courtesy of Science

The 25 master verbs: see, hear, listen, taste, smell, eat, touch, rub, lift, manipulate, run, push, fill, move, ride, say, fear, open, approach, near, enter, drive, wear, break, clean. Note that lol and pwn were not on the list.
